MIT physics professor Max Tegmark – also known as “Mad Max” for his unorthodox ideas on subjects ranging from artificial intelligence to the ultimate nature of reality – gave an inspiring keynote at this year’s Internetdagarna.

As Max Tegmark pointed out, there’s been much talk about AI disrupting the job market and enabling new weapons, but very few scientists talk seriously about the elephant in the room: what will happen once machines outsmart us at all tasks?

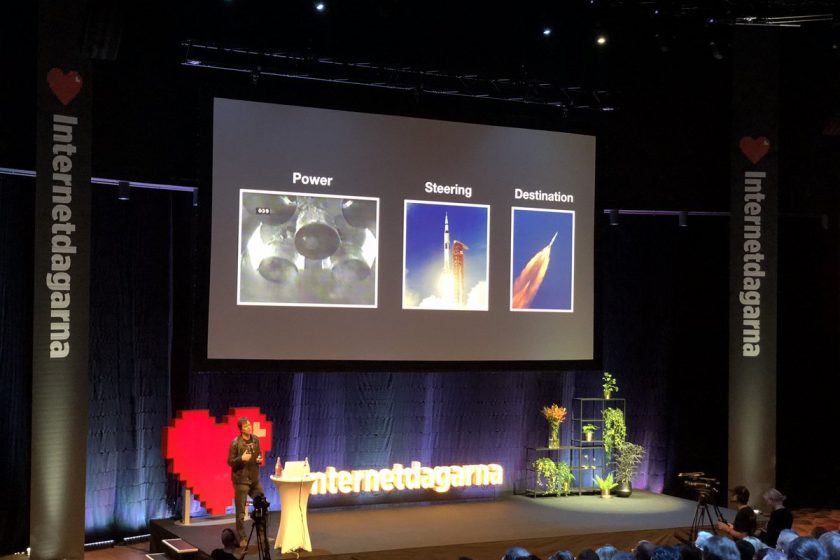

He compared the task to a space journey: to succeed, you need power, steering and a destination. The same holds for the future of AI development, where intelligent machines that design and build new iterations of themselves would represent a new form of ‘life’ (the ‘Life 3.0’ in the title of his acclaimed book “Life 3.0: Being Human in the Age of Artificial Intelligence”).

Max Tegmark argued that too much of today’s focus is on ‘evil’ machines, or on how AI can be used to create weapons. He quoted Andrew Ng: “fearing a rise of killer robots is like worrying about overpopulation on Mars”. What matters instead is aligning the machines with our goals, and what we should really worry about is not malice but competence.

The more intelligent and powerful machines get, the more important it becomes that their goals are aligned with ours. As long as we build only relatively dumb machines, the question isn’t whether human goals will prevail in the end, but merely how much trouble these machines can cause humanity before we figure out how to solve the goal-alignment problem. If a superintelligence is ever unleashed, however, it will be the other way around: since intelligence is the ability to accomplish goals, a superintelligent AI is by definition much better at accomplishing its goals than we humans are at accomplishing ours, and will therefore prevail. In other words, the real risk with AGI isn’t malice but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble. The time window during which you can load your goals into an AI may be quite short: the brief period between when it’s too dumb to get you and too smart to let you.

Max Tegmark then explained the notions of AGI and superintelligence further using the “AI map” shown in the picture to the right.

AGI stands for “artificial general intelligence” and refers to computers that can demonstrate broad human-level intelligence, i.e. AI machines that match human intelligence in all areas.

Superintelligence is when AI machines exceed human intelligence, including the intelligence of their own developers, in all areas.

Max Tegmark’s advice to the audience was to tell their kids to avoid “sea-level” professions, the jobs that the rising tide of AI capability will flood first, since these will already be taken over by AI by the time the kids enter the job market.

In a recent panel discussion with some of the world’s leading thinkers, all but one (Elon Musk) predicted that superintelligence will happen. Not now. But it will happen.

Given this future possibility, Max Tegmark argued that we should think deeply about the societal implications of what we create, and about how we might ultimately design and build AI systems that reflect and respect our hopes and values.

“Let’s build AI that doesn’t overpower us, but empowers us”.

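It will, however, be something of a wisdom race: a race between the growing power of the technology and the wisdom with which we manage it. Trial and error is not a recommended method here. We need to get it right the first time, since it might be the only chance we get.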

But if we get it right, we have every possibility to create a world that will be better for everyone.

Read all our reports from the AI track of Internetdagarna (in Swedish) here: